Recurrent models (RNNs)

Neural networks can be divided into two main categories: feed-forward networks and recurrent neural networks (RNNs).

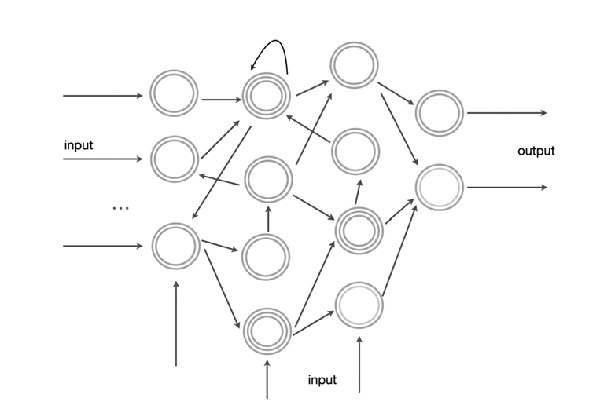

Feed-forward networks are neural networks in which information moves in only one direction, from input to output: there are no cycles, no backward connections, and no connections between nodes of the same layer. Recurrent neural networks (RNNs), in contrast, allow such connections.

RNNs are a class of artificial neural networks often used in prediction tasks, because they can analyze time series and predict future trends: for example a stock price, the trajectory of a vehicle, or the next note in a melody.

More generally, one of the distinctive features of recurrent neural networks is their ability to work on sequences of arbitrary length. This overcomes a limitation of other architectures, such as Convolutional Neural Networks (CNNs), which typically require fixed-size inputs.

Recurrent Neural Networks are able to work on:

- Sentences and text fragments

- Audio

- Documents

This allows us to solve problems such as:

- Speech-to-text

- Sentiment Analysis (sentiment extraction from phrases, e.g. reviews and social comments)

- Automatic translation

For this reason, RNNs are a very valuable asset in Natural Language Processing (NLP) problems.

In addition, RNNs can be surprisingly creative: they can predict the most likely next notes in a melodic sequence. This makes it possible to generate musical scores written entirely by Artificial Intelligence. An example is Google’s Magenta project, which uses TensorFlow to provide an ML framework for musical composition.

https://magenta.tensorflow.org

A recurrent neural network is similar to a feed-forward network, except for the presence of connections pointing backward.

To better understand this structure, consider the simplest example of an RNN: a single neuron that, at each time step, receives an input, produces an output, and sends that output back to itself as an additional input for the next step.
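The single-neuron case can be sketched in a few lines of plain Python. This is only an illustration, not a trained model: the weights `w_x`, `w_y` and the bias `b` are arbitrary values chosen for the example, and the function name `run_recurrent_neuron` is hypothetical. The `tanh` activation is also an assumption, since the text does not name one.

```python
import math


def run_recurrent_neuron(inputs, w_x=0.5, w_y=0.8, b=0.0):
    """Run one recurrent neuron over a sequence of scalar inputs.

    w_x weights the current input, w_y weights the neuron's own previous
    output (the backward connection). All values are illustrative.
    """
    y_prev = 0.0  # no previous output before the first time step
    outputs = []
    for x_t in inputs:
        # The new output depends on the current input AND the previous output.
        y_t = math.tanh(w_x * x_t + w_y * y_prev + b)
        outputs.append(y_t)
        y_prev = y_t  # fed back to the neuron at the next step
    return outputs


# The same neuron handles sequences of different lengths.
short_outputs = run_recurrent_neuron([1.0, 0.0, 0.0])
long_outputs = run_recurrent_neuron([1.0, 0.0, 0.0, 0.0, 0.0])
```

Although only the first input is non-zero, the later outputs are not zero: the feedback connection carries a trace of the first input forward in time, and that trace fades step by step.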

Among the RNNs that best implement this type of solution are the so-called Long Short-Term Memory (LSTM) networks.

These are particularly suitable for natural language recognition. In this case it is useful to be able to look back in time, even a long way, for each term we wish to recognize, so as to place it in the right context.

LSTMs typically form chains of repeating recurrent cells. Each cell applies specific activation functions (gates) to filter different characteristics of the input data, which can relate either to the current word to be predicted or to those previously stored. In other words, LSTMs “have a long memory”!

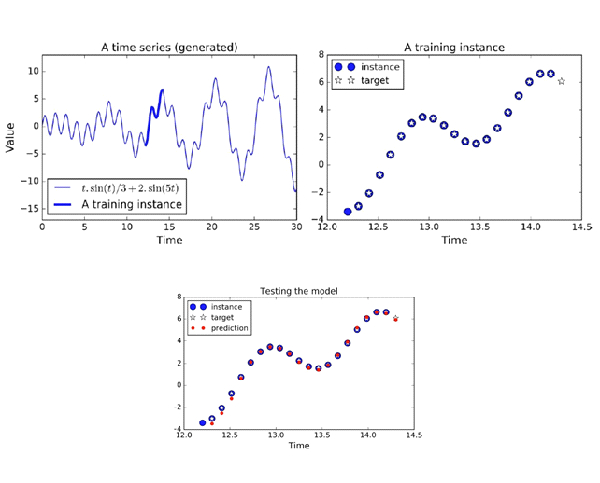
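To make the filtering role of the activation functions more concrete, here is a minimal sketch of one step of a standard LSTM cell, reduced to scalar values for readability. It follows the common textbook formulation (forget, input and output gates plus a candidate value). The parameter dictionary `params`, its key names and its numeric values are assumptions made up for this example; a real network would learn them during training.

```python
import math


def sigmoid(z):
    """Squash z into (0, 1); used by the gates as a 'how much to let through' factor."""
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t, h_prev, c_prev, p):
    """One time step of a scalar LSTM cell.

    x_t: current input, h_prev: previous output, c_prev: previous cell state.
    p: dict of illustrative weights (w* for input, u* for previous output, b* bias).
    """
    f = sigmoid(p["wf"] * x_t + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x_t + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x_t + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x_t + p["ug"] * h_prev + p["bg"])  # candidate value
    c_t = f * c_prev + i * g    # keep part of the old memory, add part of the new
    h_t = o * math.tanh(c_t)    # expose a filtered view of the memory
    return h_t, c_t


# Hypothetical parameters, chosen only so the example runs.
params = {
    "wf": 0.5, "uf": 0.5, "bf": 1.0,
    "wi": 0.5, "ui": 0.5, "bi": 0.0,
    "wo": 0.5, "uo": 0.5, "bo": 0.0,
    "wg": 0.5, "ug": 0.5, "bg": 0.0,
}

h, c = 0.0, 0.0
states = []
for x in [1.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, params)
    states.append((h, c))
```

The cell state `c_t` is the "long memory": the forget gate decides how much of it survives each step, while the input gate decides how much new information is written into it.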

A particularly common application of RNNs is time series.

A time series can describe a trend over time, such as a stock market price, the temperature of an environment, or the energy consumption of a plant.

We can consider a time series as a function sampled at several instants in time.
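As a rough illustration of this idea, the sketch below samples a function at evenly spaced instants and then cuts the result into (past window, next value) pairs, which is one common way to prepare a series for a forecasting model. The particular function (a sum of two sinusoids), the sampling step `dt`, the window length and the helper names `sample_series` and `make_windows` are all assumptions made for the example.

```python
import math


def sample_series(n_steps, dt=0.1):
    """Sample a combination of two sinusoids at n_steps evenly spaced instants."""
    return [
        0.5 * math.sin(k * dt) + 0.2 * math.sin(3.0 * k * dt)
        for k in range(n_steps)
    ]


def make_windows(series, window):
    """Split a series into (input window, next value) pairs.

    Each pair holds `window` consecutive samples and the sample that
    immediately follows them, which is the value to be predicted.
    """
    pairs = []
    for start in range(len(series) - window):
        past = series[start:start + window]
        target = series[start + window]
        pairs.append((past, target))
    return pairs


series = sample_series(50)
pairs = make_windows(series, window=10)
```

Each pair could then be fed to a recurrent model one sample at a time, with the model asked to predict the target from the window that precedes it.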

A TensorFlow-Keras example of an RNN that predicts a mathematical function (a combination of sinusoids) is available at the link below:

https://github.com/ageron/handson-ml2

Humanativa is currently working on two different projects on this topic:

- the first is a time-series analysis of passenger behavior in an airport, which predicts the number of people present at various points (gates, shops, etc.) a week in advance, with the aim of improving airport quality and safety;

- the second is a sentiment analysis of film reviews, using an LSTM-based neural network.