MLOps – Governing Machine Learning Services

As with many processes rooted in innovation, Machine Learning is moving from an experimental perspective to a service-oriented one (an end-to-end process). This shift follows a continuous maturation in the quality and regulation of its activities, described by the Machine Learning Maturity Model.

From a methodological point of view, three aspects of ML must be understood:

- Machine Learning is a governable process,

- it is considered a “standardized” process,

- and it can be applied at an industrial scale.

In the current landscape, the Machine Learning community is pushing above all to regulate how the re-training service is put into production and managed. The aim is to “redefine”, with greater awareness, the DevOps services applied to Machine Learning.

At Humanativa, our team of Data Scientists draws on decades of experience with ML processes and knows the “delivery” process in depth. This covers the path from building the ML Model to publishing it, and above all the re-training sub-processes, from monitoring the model in operation to feeding production results back into its re-training.

From an MLOps perspective, the well-established mechanisms of IT Ops services clearly need to evolve. These mechanisms govern software products from Test and Validation, through Versioning and Configuration Management, to Monitoring in operation.

On the positive side, every “era” of IT has taken a step toward industrialization, and the current era is moving in the same direction. Today, the shift of IT toward Cloud services favors the introduction of MLOps processes. The major Cloud service providers (such as Amazon AWS, Google GCP and Microsoft Azure) are geared toward standardizing the Cloud “processes” that lead to production, and this also applies to Machine Learning services.

Definition of MLOps

To clarify, let us start with some definitions.

- MLOps is the process of automating the deployment of Machine Learning (ML) applications and monitoring the performance of ML models in production.

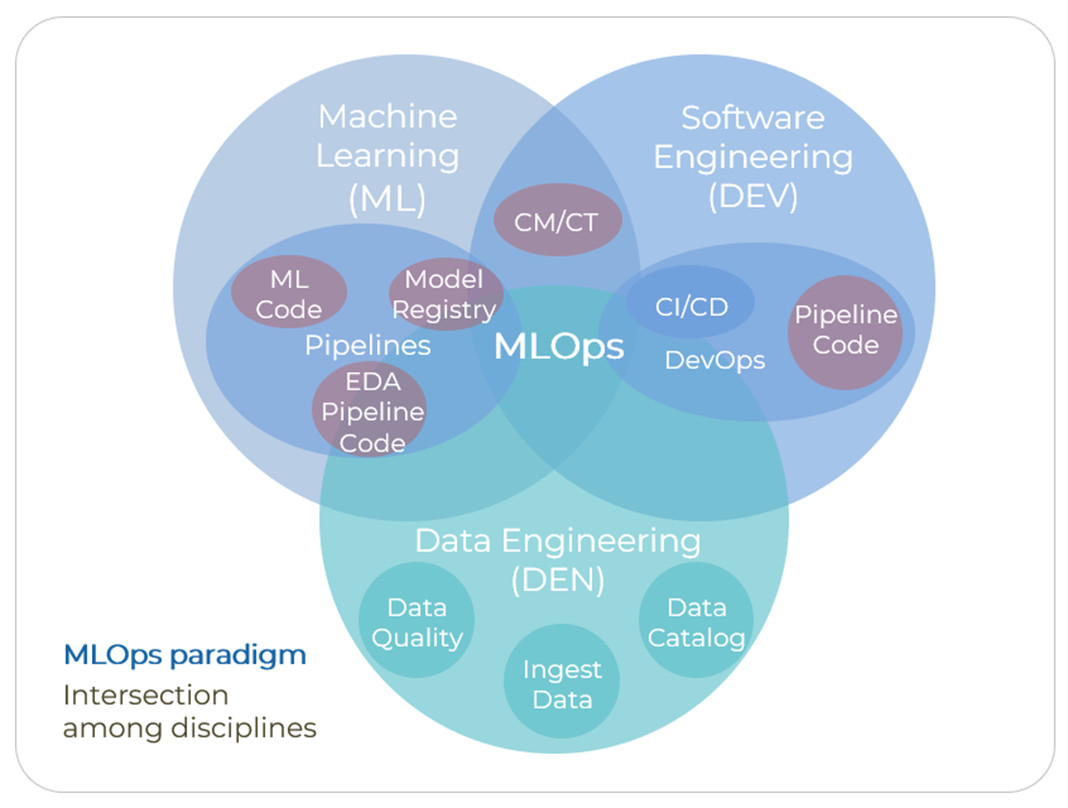

- Beyond the Continuous Integration (CI) and Continuous Delivery (CD) required by DevOps, MLOps takes the DevOps process a step further by adding Continuous Monitoring (CM) and Continuous Training (CT).

The figure shows how ML and its “artifacts” are integrated with Data Engineering and especially with Software Engineering from an Ops perspective.

Why is MLOps important?

It is certainly the most delicate phase in the governance of ML Services.

Based on our experience in the ML field, and following the evolution of Data Science along the Machine Learning Maturity Model, for Humanativa MLOps means that:

- An ML Model is a “dynamic” object. The Customer must also be aware that an ML Model is not a static object, as a software executable can be. It evolves over time and is therefore subject to continuous revision and adaptation (CM, Continuous Monitoring, and CT, Continuous Training). Without these, the ML Model could not adapt to changes in the data over time.

- The robustness and accuracy of the Model must be monitored over time through the quality of its results in operation, which can fall “below threshold” because of events and changes in the data.
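As an illustration of Continuous Monitoring, here is a minimal Python sketch of a rolling-window accuracy check that flags when the Model goes “below threshold”. It assumes that ground-truth labels eventually come back from production as feedback. The class name `AccuracyMonitor`, the window size and the 0.9 threshold are hypothetical choices, not part of any specific tool.

```python
from collections import deque


class AccuracyMonitor:
    """Rolling-window accuracy monitor (hypothetical helper, not a library API)."""

    def __init__(self, threshold: float, window: int = 100) -> None:
        self.threshold = threshold              # minimum acceptable accuracy (assumed)
        self.outcomes = deque(maxlen=window)    # 1 = correct prediction, 0 = wrong

    def record(self, prediction, actual) -> None:
        # Compare each prediction with the ground truth fed back from production;
        # the deque drops the oldest outcome once the window is full.
        self.outcomes.append(1 if prediction == actual else 0)

    def accuracy(self) -> float:
        if not self.outcomes:
            return float("nan")
        return sum(self.outcomes) / len(self.outcomes)

    def below_threshold(self) -> bool:
        # Raise an alert only once the window holds enough feedback to be meaningful.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy() < self.threshold


# Usage: 8 correct and 2 wrong predictions in a window of 10.
monitor = AccuracyMonitor(threshold=0.9, window=10)
for prediction, actual in [(1, 1)] * 8 + [(0, 1)] * 2:
    monitor.record(prediction, actual)
print(monitor.accuracy())         # 0.8
print(monitor.below_threshold())  # True
```

Requiring a full window before alerting is a design choice. It trades detection speed for fewer false alarms on small samples of feedback.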

To ensure the robustness of the Model over time, the entire “delivery” process of the Model must be as regulated, structured and automated as possible. This extends down to the Data Visualization tools, which are essential for exploring the results and detecting changes over time.

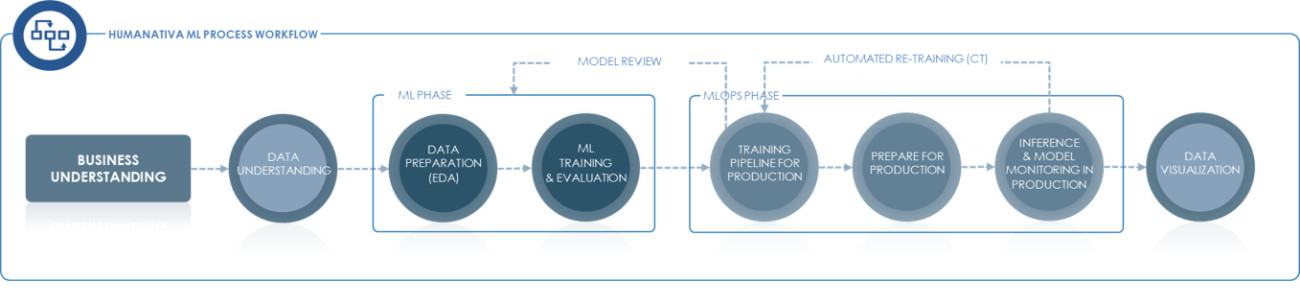

The figure shows the Humanativa Machine Learning workflow, taken from our HN ML Method methodology.

In other words, the whole journey must be made efficient and run as a continuous cycle:

- From the construction of the Model and its “artifacts”,

- to the activities of Re-Training and Model Performance Validation, Deployment, Configuration Management, Model Registry, and Versioning of the pipelines and of the Models themselves,

- to the Model Inference activities (queries to the ML Model), Model Monitoring and Feedback of the results.
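The Model Registry and Versioning activities can be pictured with a toy, in-memory registry. This is a minimal sketch under stated assumptions. `ModelRegistry`, `ModelVersion`, the stage names and the artifact paths are all hypothetical. Dedicated tools (for example, the MLflow Model Registry) provide this as a persistent service with their own APIs, which the sketch does not reproduce.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str        # where the trained artifact is stored (hypothetical path)
    metrics: dict            # validation metrics recorded at registration time
    stage: str = "staging"   # "staging", "production" or "archived"


@dataclass
class ModelRegistry:
    """Toy in-memory registry; real platforms persist this metadata."""
    name: str
    versions: list = field(default_factory=list)

    def register(self, artifact_uri: str, metrics: dict) -> ModelVersion:
        # Sequential version numbers keep every retrained Model traceable.
        mv = ModelVersion(len(self.versions) + 1, artifact_uri, metrics)
        self.versions.append(mv)
        return mv

    def get(self, version: int) -> ModelVersion:
        for mv in self.versions:
            if mv.version == version:
                return mv
        raise KeyError(f"{self.name}: unknown version {version}")

    def promote(self, version: int) -> None:
        target = self.get(version)  # fail fast before changing any stage
        # Only one version serves production; the previous one is archived,
        # not deleted, so it stays available for rollback.
        for mv in self.versions:
            if mv.stage == "production":
                mv.stage = "archived"
        target.stage = "production"

    def production(self):
        return next((mv for mv in self.versions if mv.stage == "production"), None)


# Usage with a hypothetical model name and artifact paths.
registry = ModelRegistry("churn-model")
registry.register("models/churn/v1.pkl", {"accuracy": 0.91})
registry.register("models/churn/v2.pkl", {"accuracy": 0.93})
registry.promote(1)
registry.promote(2)
print(registry.production().version)  # 2
print(registry.get(1).stage)          # archived
```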

Finally, closing the loop, whenever the feedback shows that the Model is losing accuracy or that the data domain is changing over time:

- Re-Training activities must be streamlined through an automated pipeline,

- and if that is not enough to improve the results, the learning activities must be reviewed (Model Review). This means refreshing the training data, adding new information (dataset enrichment), and therefore carrying out new analyses and producing updated versions of the Model to bring back into operation.
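The loop above can be sketched as a small decision function. This sketch makes several assumptions. Data drift is measured here with the Population Stability Index (PSI), one common choice among many, not a technique prescribed by the HN ML Method. The 0.2 PSI cut-off is a widely used rule of thumb and the 0.90 accuracy threshold is arbitrary. The function names and returned labels are hypothetical.

```python
from typing import Optional

import numpy as np


def population_stability_index(reference, current, bins: int = 10, eps: float = 1e-6) -> float:
    """PSI between a reference (training) sample and a current (production) sample."""
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)
    # Interior cut points from reference quantiles (roughly equal training mass per bin).
    cuts = np.quantile(reference, np.linspace(0, 1, bins + 1))[1:-1]
    # Values outside the training range fall into the first or last bin.
    ref_idx = np.searchsorted(cuts, reference, side="right")
    cur_idx = np.searchsorted(cuts, current, side="right")
    ref_share = np.bincount(ref_idx, minlength=bins) / len(reference)
    cur_share = np.bincount(cur_idx, minlength=bins) / len(current)
    # eps avoids log(0) and division by zero for empty bins.
    ref_share = np.clip(ref_share, eps, None)
    cur_share = np.clip(cur_share, eps, None)
    return float(np.sum((cur_share - ref_share) * np.log(cur_share / ref_share)))


def next_action(live_accuracy: float, psi: float,
                retrained_accuracy: Optional[float] = None,
                acc_threshold: float = 0.90, psi_threshold: float = 0.2) -> str:
    """Decide the next step of the loop (thresholds are assumptions, tune per use case)."""
    degraded = live_accuracy < acc_threshold or psi > psi_threshold
    if not degraded:
        return "keep"           # Model healthy: keep monitoring
    if retrained_accuracy is None:
        return "retrain"        # trigger the automated Re-Training pipeline
    if retrained_accuracy >= acc_threshold:
        return "deploy"         # retrained Model passes validation
    return "model_review"       # Re-Training alone was not enough


# Usage: simulate a one-standard-deviation shift between training and production data.
rng = np.random.default_rng(0)
train = rng.normal(0.0, 1.0, 5000)
prod = rng.normal(1.0, 1.0, 5000)
print(next_action(live_accuracy=0.93, psi=population_stability_index(train, prod)))  # retrain
```

In this example, live accuracy is still above threshold, but the shift in the input distribution alone is enough to trigger Re-Training. This reflects the text's point that changes in the data domain, not only loss of accuracy, should restart the loop.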

In conclusion, once the need for a regulated end-to-end ML process is accepted, the next challenge toward Continuous Improvement along the Machine Learning Maturity Model consists of two points:

- Auto-MLOps: identifying the points of the MLOps process where activities can be automated, so that delivery becomes ever faster as data changes over time. The aim is to substantially reduce the latency of ML updates, keep the ML service at adequate response levels, and reduce human intervention (the so-called “human-in-the-loop”) where possible.

- Machine Learning Cost Governance: how much does the ML process cost? How should the ML process be monitored? How can the Return on Investment (ROI) be evaluated? This is perhaps the most important point of this modern vision of ML services, especially for Cloud services, where cost management is complex.
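The cost question can be made concrete with a small sketch. It attributes costs to MLOps stages and computes a classic ROI, (benefit - cost) / cost. All names, stage categories and figures are hypothetical. In practice, costs would come from the Cloud provider's billing data and the benefit estimate from the business; neither is modeled here.

```python
from dataclasses import dataclass


@dataclass
class CostEntry:
    stage: str      # e.g. "training", "inference", "monitoring" (hypothetical categories)
    amount: float   # cost for the period under review, in a single currency


def cost_by_stage(entries):
    """Sum cost entries per MLOps stage (input format is an assumption)."""
    totals = {}
    for entry in entries:
        totals[entry.stage] = totals.get(entry.stage, 0.0) + entry.amount
    return totals


def roi(benefit: float, cost: float) -> float:
    """Classic ROI: (benefit - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost


# Usage with made-up figures, for illustration only.
entries = [
    CostEntry("training", 1200.0),
    CostEntry("inference", 800.0),
    CostEntry("training", 300.0),
    CostEntry("monitoring", 200.0),
]
totals = cost_by_stage(entries)
print(totals)  # {'training': 1500.0, 'inference': 800.0, 'monitoring': 200.0}
print(roi(benefit=4000.0, cost=sum(totals.values())))  # 0.6
```

Breaking costs down by stage, rather than tracking a single total, shows where Re-Training or inference dominates the bill. That breakdown is what makes the cost of each part of the MLOps loop governable.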